Wayland Apps in WireGuard Docker Containers

Running WireGuard in a Docker container can be a convenient way to isolate a WireGuard network from the rest of a system. We’ve covered a variety of patterns for using WireGuard in containers in the past; in this article, we’ll dive deep into one particular pattern: using GUI (Graphical User Interface) Linux applications inside Docker containers to access remote sites through WireGuard.

We’ll build on the core “Container Network” technique covered previously, where we use a shared network namespace to allow other containers to access the WireGuard network of a dedicated WireGuard container. In this case, we’ll build and run several custom containers with a couple of GUI applications, allowing their GUIs to be used seamlessly as part of the container host’s Wayland display — while still being able to access remote servers through the isolated WireGuard network of the WireGuard container.

We’ll work our way up to running a full-fledged Firefox browser with video and audio in a container to access an isolated WireGuard network, but first we’ll cover a number of intermediate steps:

- Set up our Docker Projects

- Set up the WireGuard Network

- Set up a simple SMB Client

- Set up an SSH Client with SSH-agent access

- Set up an RDP Client with Wayland

- Set up a basic Firefox Client

- Configure WireGuard Internet Access

- Update Firefox With Video & Audio support

- Add miscellaneous Firefox Extras

Docker Projects

For convenience, we will use Docker Compose to manage our containers, using two separate compose files:

- ~/containers/wg-build/docker-compose.yml, to build the container images

- ~/containers/wg-network1/docker-compose.yml, to run the containers

The first project will contain the Dockerfiles (and other miscellaneous files) that we need to build our custom container images, and the second project will contain the files and settings we use at runtime with our WireGuard network (such as the WireGuard configuration files, and the browser profile and cache content).

This will allow us to use the second project as a template for other WireGuard networks that we might want to add in the future; for example, to add a new ~/containers/wg-network-east project to access our Eastern datacenter, and a separate ~/containers/wg-network-west project to access our Western datacenter.
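If you do end up cloning the runtime project for another network, a small helper can save some typing. The following is a minimal bash sketch, not part of the original setup; the new_wg_network name and its behavior (copying only the compose file, and pre-creating the wg, smb, ssh, rdp, and ff mount points) are our own assumptions. It deliberately leaves the new wg/ directory empty, since each network needs its own WireGuard keys and configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original setup): scaffold a new runtime
# project, such as ~/containers/wg-network-east, from an existing one.
# Only docker-compose.yml is copied; WireGuard configs and app data are not,
# since each network needs its own keys, profiles, and caches.
new_wg_network() {
  local template_dir=$1 new_dir=$2
  local sub

  if [ ! -f "$template_dir/docker-compose.yml" ]; then
    echo "no docker-compose.yml in $template_dir" >&2
    return 1
  fi
  if [ -e "$new_dir" ]; then
    echo "$new_dir already exists; refusing to overwrite it" >&2
    return 1
  fi

  mkdir -p "$new_dir" || return 1
  cp "$template_dir/docker-compose.yml" "$new_dir/" || return 1

  # Pre-create the bind-mount sources (as the current user) for every
  # service defined in this guide; wg/ is left empty for the new wg0.conf.
  for sub in wg smb ssh rdp ff; do
    mkdir -p "$new_dir/$sub" || return 1
  done
}
```

For example, new_wg_network ~/containers/wg-network1 ~/containers/wg-network-east would create the skeleton of the Eastern datacenter project, into which you would then save a wg0.conf for that network. Pre-creating the mount points as your own user avoids having the Docker daemon create them (as root) the first time a service mounts them.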

WireGuard Network

First, we’ll set up a simple WireGuard point-to-site network, using the configuration settings from the WireGuard Point to Site Configuration guide.

As shown by that guide, on the remote side of the connection (Host β), we’ll set up a WireGuard interface with the following configuration:

# /etc/wireguard/wg0.conf

# local settings for Host β

[Interface]

PrivateKey = ABBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBBFA=

Address = 10.0.0.2/32

ListenPort = 51822

# IP forwarding

PreUp = sysctl -w net.ipv4.ip_forward=1

# IP masquerading

PreUp = iptables -t mangle -A PREROUTING -i wg0 -j MARK --set-mark 0x30

PreUp = iptables -t nat -A POSTROUTING ! -o wg0 -m mark --mark 0x30 -j MASQUERADE

PostDown = iptables -t mangle -D PREROUTING -i wg0 -j MARK --set-mark 0x30

PostDown = iptables -t nat -D POSTROUTING ! -o wg0 -m mark --mark 0x30 -j MASQUERADE

# remote settings for Endpoint A

[Peer]

PublicKey = /TOE4TKtAqVsePRVR+5AA43HkAK5DSntkOCO7nYq5xU=

AllowedIPs = 10.0.0.1/32

Start up this WireGuard interface on the remote host as shown in the guide (e.g. sudo wg-quick up wg0).

For the local side of the connection (our personal laptop), we’ll use the configuration for Endpoint A from the guide, saving it as ~/containers/wg-network1/wg/wg0.conf:

# ~/containers/wg-network1/wg/wg0.conf

# local settings for Endpoint A

[Interface]

PrivateKey = AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAEE=

Address = 10.0.0.1/32

ListenPort = 51821

# remote settings for Host β

[Peer]

PublicKey = fE/wdxzl0klVp/IR8UcaoGUMjqaWi3jAd7KzHKFS6Ds=

Endpoint = 203.0.113.2:51822

AllowedIPs = 192.168.200.0/24

This WireGuard network will give the local side of the connection access to the other servers in the 192.168.200.0/24 address range at Site B.
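To make that routing decision concrete: a packet from the container goes through the WireGuard tunnel only if its destination falls within one of the peer’s AllowedIPs prefixes (wg-quick adds a matching route, as in the ip -4 route add 192.168.200.0/24 dev wg0 line of the startup log below). The following bash sketch shows just that containment check for IPv4; the ip_to_int and ip_in_cidr names are hypothetical, and the sketch does no input validation.

```shell
#!/usr/bin/env bash
# Sketch of the containment test behind AllowedIPs routing (IPv4 only,
# no input validation; octets with leading zeros are not supported).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a octets
  octets=($1)   # split on "." using the local IFS
  echo $(( (octets[0] << 24) | (octets[1] << 16) | (octets[2] << 8) | octets[3] ))
}

# Succeed if IPv4 address $1 lies within CIDR prefix $2 (e.g. 192.168.200.0/24).
ip_in_cidr() {
  local addr=$1 net=${2%/*} bits=${2#*/}
  # Bash arithmetic is 64-bit, so mask the shifted value back to 32 bits;
  # a /0 prefix yields a mask of 0, which matches every address.
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  local a n
  a=$(ip_to_int "$addr")
  n=$(ip_to_int "$net")
  (( (a & mask) == (n & mask) ))
}
```

For example, ip_in_cidr 192.168.200.22 192.168.200.0/24 succeeds, while ip_in_cidr 203.0.113.50 192.168.200.0/24 fails, so with this configuration only the former destination would be sent through the tunnel.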

To run this connection in a Docker container, we’ll add a wg service to our runtime compose file at ~/containers/wg-network1/docker-compose.yml:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

volumes:

- ./wg:/etc/wireguard

Then we’ll start up the wg service:

$ cd ~/containers/wg-network1

$ sudo docker compose up wg

[+] Running 11/11

✔ wg Pulled ...

[+] Running 1/1

✔ Container wg-network1-wg-1 Created ...

Attaching to wg-1

wg-1 |

wg-1 | * /proc is already mounted

wg-1 | * /run/lock: creating directory

wg-1 | * /run/lock: correcting owner

wg-1 | OpenRC 0.54 is starting up Linux 6.5.0-45-generic (x86_64) [DOCKER]

wg-1 |

wg-1 | * Caching service dependencies ... [ ok ]

wg-1 | [#] ip link add wg0 type wireguard

wg-1 | [#] wg setconf wg0 /dev/fd/63

wg-1 | [#] ip -4 address add 10.0.0.1/32 dev wg0

wg-1 | [#] ip link set mtu 1420 up dev wg0

wg-1 | [#] ip -4 route add 192.168.200.0/24 dev wg0

wg-1 | * Starting WireGuard interface wg0 ... [ ok ]

The WireGuard connection from our local machine to Site B is now up and running inside the wg-network1-wg-1 container.

SMB Client

As the first step to using this WireGuard connection, we’ll set up a simple SMB client, using the command-line smbclient program.

We’ll build an image for this client with the following Dockerfile:

# ~/containers/wg-build/smb/Dockerfile

FROM alpine:latest

RUN apk add --no-cache \

bash \

samba-client

RUN addgroup al && adduser -D -G al al

USER al

WORKDIR /home/al

CMD bash

The only notable thing about this image is that it creates, and runs as, a custom user account with a user ID of 1000 (to match the UID of our personal user on the container host), allowing us to avoid any file-ownership annoyances when sharing files between the container and the host.

Save this Dockerfile at ~/containers/wg-build/smb/Dockerfile, then add a service for it to our build compose file at ~/containers/wg-build/docker-compose.yml:

# ~/containers/wg-build/docker-compose.yml

services:

smb:

build: smb

image: my-smb

And build the image:

$ cd ~/containers/wg-build

$ sudo docker compose build smb

[+] Building ...

=> => naming to docker.io/library/my-smb

=> [build] resolving provenance for metadata file

Next, add a service for it to our runtime compose file at ~/containers/wg-network1/docker-compose.yml:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

volumes:

- ./wg:/etc/wireguard

smb:

image: my-smb

network_mode: 'service:wg'

volumes:

- ./smb:/home/al

This new smb service will use the my-smb image we just built, and it will share the wg service’s network namespace (so it can use the WireGuard interface). It will also mount the container host’s ~/containers/wg-network1/smb/ directory to the home directory of the custom user (al) within the container.

Before using this container, create this ~/containers/wg-network1/smb/ mount point on the host:

$ cd ~/containers/wg-network1

$ mkdir -p smb
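Creating the mount point ourselves matters because, with a root-run Docker daemon as used here, a missing bind-mount source directory gets created by Docker itself, owned by root; the container’s al user (UID 1000) then can’t write to its own home directory. A minimal bash sketch of a check you could run instead of a bare mkdir follows; the prepare_mount name is hypothetical, and it assumes a stat that supports -c (GNU coreutils or BusyBox).

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a bind-mount source directory and confirm it is
# owned by the UID the container user runs as (1000 for the images in this
# guide). Assumes GNU coreutils or BusyBox stat (for "stat -c").
prepare_mount() {
  local dir=$1 want_uid=${2:-1000}
  local have_uid

  mkdir -p "$dir" || return 1
  have_uid=$(stat -c %u "$dir") || return 1
  if [ "$have_uid" != "$want_uid" ]; then
    echo "$dir is owned by UID $have_uid, expected $want_uid" >&2
    return 1
  fi
}
```

For example, prepare_mount ~/containers/wg-network1/smb reports an error if the directory already exists but belongs to some other UID (such as root, after Docker created it); in that case, running sudo chown 1000:1000 on the directory fixes it.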

And if we had previously shut down the wg service, make sure to start it back up again:

$ cd ~/containers/wg-network1

$ sudo docker compose up wg

...

Then (in a new terminal) run the smb service:

$ cd ~/containers/wg-network1

$ sudo docker compose run smb

[+] Running 1/0

✔ Container wg-network1-wg-1 Running ...

2a04e264f1da:~$

This will bring up a Bash shell in a new container for the smb service. In this shell, we can use the SMB client to connect to an SMB server at the remote site through the WireGuard connection provided by the wg service:

2a04e264f1da:~$ smbclient -U justin '\\192.168.200.33\shares'

Password for [WORKGROUP\justin]:

Try "help" to get a list of possible commands.

smb: \>

Or we can just use the WireGuard connection to ping a server at the remote site:

2a04e264f1da:~$ ping -nc1 192.168.200.22

PING 192.168.200.22 (192.168.200.22): 56 data bytes

64 bytes from 192.168.200.22: seq=0 ttl=42 time=68.573 ms

--- 192.168.200.22 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 68.573/68.573/68.573 ms

SSH Client

As the next step, we’ll set up an SSH client in a container that can use this WireGuard network and still have access to the SSH agent on the container host. Having access to the host’s SSH agent will enable us to use any SSH keys stored on the host — or on a smart card attached to the host — that we’ve added to the SSH agent.

For this step, we’ll again build a simple image for the client with a custom Dockerfile:

# ~/containers/wg-build/ssh/Dockerfile

FROM alpine:latest

RUN apk add --no-cache \

bash \

openssh-client

RUN addgroup al && adduser -D -G al al && \

mkdir -p /run/user/1000 && \

chmod -R 700 /run/user/1000 && \

chown -R 1000:1000 /run/user/1000

ENV SSH_AUTH_SOCK=/run/user/1000/ssh-agent

USER al

WORKDIR /home/al

CMD bash

In this Dockerfile, however, after we create a user account with a UID of 1000 (al), we also set up the standard /run/user/1000/ runtime directory for user-owned socket files, and set the container’s SSH_AUTH_SOCK environment variable to point at a file in that directory. While nothing is in that directory at this point, later on (at runtime) we’ll map the host’s SSH-agent socket file into it at this specific path.

Save this Dockerfile at ~/containers/wg-build/ssh/Dockerfile, then add a service for it to our build compose file at ~/containers/wg-build/docker-compose.yml:

# ~/containers/wg-build/docker-compose.yml

services:

smb:

build: smb

image: my-smb

ssh:

build: ssh

image: my-ssh

And build the image:

$ cd ~/containers/wg-build

$ sudo docker compose build ssh

[+] Building ...

=> => naming to docker.io/library/my-ssh

=> [build] resolving provenance for metadata file

Next, add a service for it to our runtime compose file at ~/containers/wg-network1/docker-compose.yml:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

volumes:

- ./wg:/etc/wireguard

smb:

image: my-smb

network_mode: 'service:wg'

volumes:

- ./smb:/home/al

ssh:

image: my-ssh

network_mode: 'service:wg'

volumes:

- $SSH_AUTH_SOCK:/run/user/1000/ssh-agent

- ./ssh:/home/al

This service definition for the ssh service is similar to that of the SMB Client, except that we have added an additional volume. We use this additional volume definition to map our actual SSH-agent socket file on the host to the /run/user/1000/ssh-agent socket file referenced in the my-ssh image’s Dockerfile.

Note

Run the following command in a terminal on the host to see where the SSH-agent socket file on your host lives:

$ echo $SSH_AUTH_SOCK

If this environment variable is empty, you’re probably not running an SSH agent.

Mapping the SSH-agent socket file like this enables us to use the SSH agent from the host inside the container.
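Since the compose file takes the socket path from the $SSH_AUTH_SOCK variable, it’s worth checking that the variable is set and actually points at a socket before starting the container. The following bash sketch (check_agent_socket is a hypothetical name) performs that check; run it in the same shell, with the same environment, that compose will read the variable from.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the ssh service: the compose file mounts
# "$SSH_AUTH_SOCK:/run/user/1000/ssh-agent", so the variable must be set and
# must name a Unix socket on the host.
check_agent_socket() {
  local sock=${SSH_AUTH_SOCK:-}
  if [ -z "$sock" ]; then
    echo "SSH_AUTH_SOCK is not set (is an SSH agent running?)" >&2
    return 1
  fi
  # -S is true only for an existing socket file.
  if [ ! -S "$sock" ]; then
    echo "SSH_AUTH_SOCK=$sock is not a socket" >&2
    return 1
  fi
  echo "SSH agent socket: $sock"
}
```

If the check passes, running ssh-add -L on the host should list the same keys that the container will see.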

Now we can start up the ssh service. Run the following commands (in a new terminal) to create the ~/containers/wg-network1/ssh/ mount point and start up an ssh container:

$ cd ~/containers/wg-network1

$ mkdir -p ssh

$ sudo docker compose run ssh

[+] Running 1/0

✔ Container wg-network1-wg-1 Running ...

cc574a6ef3af:~$

This will bring up a Bash shell in a new container for the ssh service. If we run the ssh-add -L command in this new container, we should see all the SSH keys that we’ve added to the SSH agent on the host:

cc574a6ef3af:~$ ssh-add -L

sk-ssh-ed25519@openssh.com AAAAGnNrLXNzaC1lZDI1NTE5QG9wZW5zc2guY29tAAAAIO5k/uLhCT7OLR/S048kPicX6LQ9CUgJugjSjPxGq3XmAAAABHNzaDo= justin@myws

And we should be able to use those SSH keys to SSH through WireGuard into any servers at Site B that accept them:

cc574a6ef3af:~$ ssh justin@192.168.200.22

Last login: Tue Oct 1 18:07:25 2024 from 192.168.200.33

justin@www:~$

Note that because we’ve mounted the host’s ~/containers/wg-network1/ssh/ directory to the ssh service’s /home/al/ directory, we can use the host’s ~/containers/wg-network1/ssh/.ssh/ subdirectory to configure SSH in the container. For example, if we configure the ~/containers/wg-network1/ssh/.ssh/config file like the following:

# ~/containers/wg-network1/ssh/.ssh/config

Host www-server

HostName 192.168.200.22

User justin

Then in the ssh container, we can simply run the following command to SSH into the 192.168.200.22 host as the justin user:

cc574a6ef3af:~$ ssh www-server

Last login: Tue Oct 1 22:10:53 2024 from 192.168.200.2

justin@www:~$

RDP Client

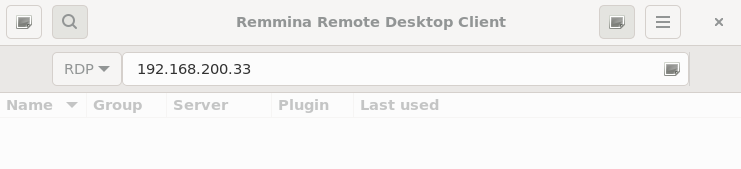

Now we’re ready to set up our first GUI app in a Docker container. For this step, we’ll set up Remmina, which provides a GUI for connecting to a remote server over RDP. To enable the Remmina GUI to work, we’ll need to use the same trick that we used above to pass the host’s SSH-agent socket to the SSH Client — but this time we’ll need to pass the host’s Wayland-display socket.

Note

This guide assumes you’re running Wayland on the host. If you are instead using X11, the same principle applies, but with a few different settings. See the x11docker project wiki for more information.

If you’re unsure as to whether or not you’re running Wayland, run the following command on the host:

$ echo $WAYLAND_DISPLAY

If this environment variable is empty, you’re probably not running Wayland.

For this step, we’ll build an image for Remmina (and its RDP plugin) using a custom Dockerfile:

# ~/containers/wg-build/rdp/Dockerfile

FROM ubuntu:22.04

ARG APT_GET="apt-get -qq -o=Dpkg::Use-Pty=0 --no-install-recommends" \

DEBIAN_FRONTEND=noninteractive \

DEBIAN_PRIORITY=critical

ENV LANG=en_US

RUN ${APT_GET} update && ${APT_GET} install \

remmina \

remmina-plugin-rdp \

&& rm -rf /var/lib/apt/lists/*

RUN adduser ubuntu && \

mkdir -p /run/user/1000 && \

chmod -R 700 /run/user/1000 && \

chown -R 1000:1000 /run/user/1000

ENV WAYLAND_DISPLAY=wayland-0 \

XDG_RUNTIME_DIR=/run/user/1000

USER ubuntu

WORKDIR /home/ubuntu

CMD remmina

Like with the Dockerfile from the SSH Client, after we create a user account with a UID of 1000 (this time named ubuntu), we’ll also set up the standard /run/user/1000/ runtime directory for user-owned socket files. In this Dockerfile, however, we’ll set the container’s XDG_RUNTIME_DIR environment variable to point to that directory, and we’ll also set the container’s WAYLAND_DISPLAY environment variable. Together, they’ll signal to programs running in the container that they can use a socket file at /run/user/1000/wayland-0 in the container to connect to Wayland.
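The lookup those two variables drive can be sketched in a few lines of bash. This is an illustration of libwayland’s documented behavior, not code from Remmina: the display name defaults to wayland-0 when WAYLAND_DISPLAY is unset, and it is resolved relative to XDG_RUNTIME_DIR unless it is already an absolute path (which recent libwayland versions accept). The wayland_socket_path name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of how a Wayland client locates the compositor's socket:
# $WAYLAND_DISPLAY (default "wayland-0") relative to $XDG_RUNTIME_DIR,
# unless $WAYLAND_DISPLAY is already an absolute path.
wayland_socket_path() {
  local display=${WAYLAND_DISPLAY:-wayland-0}
  case $display in
    /*)
      # Absolute path: used as-is.
      echo "$display"
      ;;
    *)
      if [ -z "${XDG_RUNTIME_DIR:-}" ]; then
        echo "XDG_RUNTIME_DIR is not set" >&2
        return 1
      fi
      echo "$XDG_RUNTIME_DIR/$display"
      ;;
  esac
}
```

One consequence: the compose volume definitions in this guide build the host-side path as $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY, which assumes the relative form. If your session sets WAYLAND_DISPLAY to an absolute path, use that path on its own as the source of the volume mapping instead.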

Note

The version of Remmina packaged with Ubuntu 24.04 seems to work only with X11, so we’re deliberately using Ubuntu 22.04 as the base image for this Dockerfile, as its remmina package works nicely with Wayland.

Save this Dockerfile at ~/containers/wg-build/rdp/Dockerfile, then add a service for it to our build compose file at ~/containers/wg-build/docker-compose.yml:

# ~/containers/wg-build/docker-compose.yml

services:

...

ssh:

build: ssh

image: my-ssh

rdp:

build: rdp

image: my-rdp

And build the image:

$ cd ~/containers/wg-build

$ sudo docker compose build rdp

[+] Building ...

=> => naming to docker.io/library/my-rdp

=> [build] resolving provenance for metadata file

Next, add a service for it to our runtime compose file at ~/containers/wg-network1/docker-compose.yml:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

volumes:

- ./wg:/etc/wireguard

...

ssh:

image: my-ssh

network_mode: 'service:wg'

volumes:

- $SSH_AUTH_SOCK:/run/user/1000/ssh-agent

- ./ssh:/home/al

rdp:

image: my-rdp

network_mode: 'service:wg'

volumes:

- $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY:/run/user/1000/wayland-0

- ./rdp:/home/ubuntu

Like the SSH Client, we use the rdp service definition to map a socket file from the host into a container. With the rdp service, however, we’re mapping the Wayland-display socket file, which we map from the host to the container’s /run/user/1000/wayland-0 path. Mapping the Wayland-display socket file like this enables Remmina, running in the container, to interact with our Wayland display, running on the host.
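Compose fills in $XDG_RUNTIME_DIR and $WAYLAND_DISPLAY (and $SSH_AUTH_SOCK for the ssh service) from the environment it runs in. Because sudo resets most environment variables by default, one option is to record these values in a .env file in the runtime project’s directory, which Docker Compose reads for variable interpolation. The following bash sketch does that; the write_compose_env name is hypothetical, and you would run it as your regular user (not via sudo) from your desktop session.

```shell
#!/usr/bin/env bash
# Hypothetical helper: record the host's socket-related variables in the
# runtime project's .env file, which Docker Compose reads for interpolation,
# so they still resolve when compose itself runs under sudo.
# Note: this overwrites any existing .env file in the project directory.
write_compose_env() {
  local project_dir=$1
  local var
  : > "$project_dir/.env" || return 1
  for var in XDG_RUNTIME_DIR WAYLAND_DISPLAY SSH_AUTH_SOCK; do
    # ${!var} is bash indirect expansion: the value of the variable named $var.
    if [ -z "${!var:-}" ]; then
      echo "warning: $var is not set; skipping" >&2
      continue
    fi
    printf '%s=%s\n' "$var" "${!var}" >> "$project_dir/.env"
  done
}
```

For example, running write_compose_env ~/containers/wg-network1 once per login session would let sudo docker compose run rdp resolve the socket paths. Values set in the shell environment still take precedence over the .env file, so this doesn’t interfere when the variables are passed through. Since socket paths can change between sessions (notably SSH_AUTH_SOCK), rerun it after logging in again.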

If we now run the rdp service in a new terminal, this will start up Remmina, and open up its main window in the host’s Wayland display:

$ cd ~/containers/wg-network1

$ mkdir -p rdp

$ sudo docker compose run rdp

...

This allows us to use Remmina’s GUI to RDP through WireGuard to a server in Site B.

And because we mapped the host’s ~/containers/wg-network1/rdp directory to the home directory of the container’s user, all the container’s Remmina preferences (and cache data) are stored there:

$ tree -a ~/containers/wg-network1/rdp

/home/justin/containers/wg-network1/rdp
├── .cache
│   ├── org.remmina.Remmina
│   │   └── latest_news.md
│   └── remmina
│       ├── group_rdp_shares_192-168-200-33.remmina.state
│       └── remmina.pref.state
├── .config
│   ├── freerdp
│   │   ├── certs
│   │   ├── known_hosts2
│   │   └── server
│   ├── gtk-3.0
│   └── remmina
│       └── remmina.pref
└── .local
    └── share
        ├── recently-used.xbel
        └── remmina
            └── group_rdp_shares_192-168-200-33.remmina

13 directories, 7 files

Firefox Client

Now we’re ready to set up Firefox in a Docker container. Just like with the RDP Client above, we’ll pass the host’s Wayland-display socket to the container through a volume mount point, allowing Firefox, running in a container, to use the host’s display.

And like with the RDP Client, we’ll also build a Docker image for Firefox with a custom Dockerfile:

# ~/containers/wg-build/ff/Dockerfile

FROM ubuntu:24.04

ARG APT_GET="apt-get -qq -o=Dpkg::Use-Pty=0 --no-install-recommends" \

DEBIAN_FRONTEND=noninteractive \

DEBIAN_PRIORITY=critical

ENV LANG=en_US

RUN ${APT_GET} update && ${APT_GET} install ca-certificates

COPY conf/packages.mozilla.org.asc /etc/apt/keyrings/packages.mozilla.org.asc

COPY conf/mozilla.list /etc/apt/sources.list.d/mozilla.list

COPY conf/mozilla-pin /etc/apt/preferences.d/mozilla-pin

RUN ${APT_GET} update && ${APT_GET} install \

firefox \

&& rm -rf /var/lib/apt/lists/*

RUN mkdir -p /run/user/1000 && \

chmod -R 700 /run/user/1000 && \

chown -R 1000:1000 /run/user/1000

ENV WAYLAND_DISPLAY=wayland-0 \

XDG_RUNTIME_DIR=/run/user/1000

USER ubuntu

WORKDIR /home/ubuntu

CMD firefox

This Dockerfile is quite similar to the one for the RDP Client, differing only in that:

- We use the latest Ubuntu LTS (24.04)

- We install the firefox package instead of the remmina packages

- We don’t create the ubuntu user ourselves, as the ubuntu:24.04 base image already includes an ubuntu user with a UID of 1000

- We add the Mozilla repositories (installing ca-certificates first, so apt can fetch from them over HTTPS) to avoid the Snapcraft packages used by default in Ubuntu for Firefox

Save the configuration files needed for the Mozilla repositories in the ~/containers/wg-build/ff/conf/ directory:

# ~/containers/wg-build/ff/conf/packages.mozilla.org.asc

-----BEGIN PGP PUBLIC KEY BLOCK-----

xsBNBGCRt7MBCADkYJHHQQoL6tKrW/LbmfR9ljz7ib2aWno4JO3VKQvLwjyUMPpq

/SXXMOnx8jXwgWizpPxQYDRJ0SQXS9ULJ1hXRL/OgMnZAYvYDeV2jBnKsAIEdiG/

e1qm8P4W9qpWJc+hNq7FOT13RzGWRx57SdLWSXo0KeY38r9lvjjOmT/cuOcmjwlD

T9XYf/RSO+yJ/AsyMdAr+ZbDeQUd9HYJiPdI04lGaGM02MjDMnx+monc+y54t+Z+

ry1WtQdzoQt9dHlIPlV1tR+xV5DHHsejCZxu9TWzzSlL5wfBBeEz7R/OIzivGJpW

QdJzd+2QDXSRg9q2XYWP5ZVtSgjVVJjNlb6ZABEBAAHNVEFydGlmYWN0IFJlZ2lz

dHJ5IFJlcG9zaXRvcnkgU2lnbmVyIDxhcnRpZmFjdC1yZWdpc3RyeS1yZXBvc2l0

b3J5LXNpZ25lckBnb29nbGUuY29tPsLAjgQTAQoAOBYhBDW6oLM+nrOW9ZyoOMC6

XObcYxWjBQJgkbezAhsDBQsJCAcCBhUKCQgLAgQWAgMBAh4BAheAAAoJEMC6XObc

YxWj+igIAMFh6DrAYMeq9sbZ1ZG6oAMrinUheGQbEqe76nIDQNsZnhDwZ2wWqgVC

7DgOMqlhQmOmzm7M6Nzmq2dvPwq3xC2OeI9fQyzjT72deBTzLP7PJok9PJFOMdLf

ILSsUnmMsheQt4DUO0jYAX2KUuWOIXXJaZ319QyoRNBPYa5qz7qXS7wHLOY89IDq

fHt6Aud8ER5zhyOyhytcYMeaGC1g1IKWmgewnhEq02FantMJGlmmFi2eA0EPD02G

C3742QGqRxLwjWsm5/TpyuU24EYKRGCRm7QdVIo3ugFSetKrn0byOxWGBvtu4fH8

XWvZkRT+u+yzH1s5yFYBqc2JTrrJvRU=

=QnvN

-----END PGP PUBLIC KEY BLOCK-----

# ~/containers/wg-build/ff/conf/mozilla.list

deb [signed-by=/etc/apt/keyrings/packages.mozilla.org.asc] https://packages.mozilla.org/apt mozilla main

# ~/containers/wg-build/ff/conf/mozilla-pin

Package: *

Pin: origin packages.mozilla.org

Pin-Priority: 1000

And add a service to build the Dockerfile to our build compose file at ~/containers/wg-build/docker-compose.yml:

# ~/containers/wg-build/docker-compose.yml

services:

...

rdp:

build: rdp

image: my-rdp

ff:

build: ff

image: my-ff

Then build the image:

$ cd ~/containers/wg-build

$ sudo docker compose build ff

[+] Building ...

=> => naming to docker.io/library/my-ff

=> [build] resolving provenance for metadata file
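Since the Mozilla signing key above is saved by hand, it’s worth confirming it’s the genuine key before trusting the packages it verifies (and rebuilding the image if it isn’t). Mozilla’s APT setup instructions publish the expected fingerprint, 35BAA0B33E9EB396F59CA838C0BA5CE6DC6315A3, and suggest inspecting the key with gpg -n -q --import --import-options import-show, which prints the fingerprint on the line after the pub line. The following bash sketch (check_mozilla_fpr is a hypothetical name) compares that output against the expected value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the output of
#   gpg -n -q --import --import-options import-show KEYFILE
# on stdin, and compare the fingerprint (the line after "pub") with the
# value Mozilla publishes for its APT repository signing key.
check_mozilla_fpr() {
  local expected=35BAA0B33E9EB396F59CA838C0BA5CE6DC6315A3
  local fpr
  # On the first "pub" line, read the next line, strip whitespace, print it.
  fpr=$(awk '/^pub/ { getline; gsub(/[[:space:]]/, ""); print; exit }')
  if [ "$fpr" = "$expected" ]; then
    echo "fingerprint matches ($fpr)"
  else
    echo "fingerprint mismatch: got '$fpr'" >&2
    return 1
  fi
}
```

For example:

$ gpg -n -q --import --import-options import-show ~/containers/wg-build/ff/conf/packages.mozilla.org.asc | check_mozilla_fpr

The -n (dry-run) option keeps gpg from actually importing the key into your keyring.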

Next, add a service for it to our runtime compose file at ~/containers/wg-network1/docker-compose.yml:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

volumes:

- ./wg:/etc/wireguard

...

rdp:

image: my-rdp

network_mode: 'service:wg'

volumes:

- $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY:/run/user/1000/wayland-0

- ./rdp:/home/ubuntu

ff:

image: my-ff

network_mode: 'service:wg'

volumes:

- $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY:/run/user/1000/wayland-0

- ./ff:/home/ubuntu

The service definition for Firefox is almost exactly the same as the one for the RDP Client; all that’s different is that we’re using the my-ff image, and we’re mapping the home folder of the container’s ubuntu user to the host’s ff/ directory.

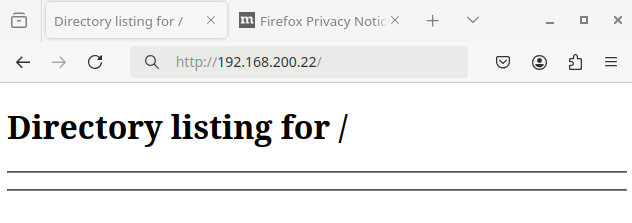

If we run the ff service in a new terminal, this will start up Firefox, and open up a new browser window in our Wayland display:

$ cd ~/containers/wg-network1

$ mkdir -p ff

$ sudo docker compose run ff

...

We can then browse any private webservers running in Site B, using the wg service’s WireGuard connection.

All the profile settings and other data for this Firefox instance will be stored in the *.default-release/ subdirectory of the host’s ~/containers/wg-network1/ff/.mozilla/firefox/ directory. The prefix for this subdirectory is generated randomly when Firefox creates the profile; in this example, the subdirectory is mrnlwsve.default-release/:

$ ls -1 ~/containers/wg-network1/ff/.mozilla/firefox

6wjegbdk.default

'Crash Reports'

installs.ini

mrnlwsve.default-release

'Pending Pings'

profiles.ini
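If you want to locate this profile from a script on the host (say, to back it up before experimenting), the random prefix gets in the way. The following bash sketch finds it by globbing for the *.default-release suffix shown above; the find_ff_profile name is hypothetical, and it assumes exactly one such profile exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the host path of the *.default-release Firefox
# profile under a mounted home directory (e.g. ~/containers/wg-network1/ff).
find_ff_profile() {
  local ff_home=$1
  local -a matches
  matches=("$ff_home"/.mozilla/firefox/*.default-release)
  # Without nullglob, an unmatched pattern is left as-is, so check that the
  # first entry really is a directory.
  if [ "${#matches[@]}" -eq 0 ] || [ ! -d "${matches[0]}" ]; then
    echo "no *.default-release profile under $ff_home" >&2
    return 1
  fi
  if [ "${#matches[@]}" -gt 1 ]; then
    echo "more than one *.default-release profile under $ff_home" >&2
    return 1
  fi
  echo "${matches[0]}"
}
```

For example, find_ff_profile ~/containers/wg-network1/ff would print the path of the mrnlwsve.default-release directory from the listing above.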

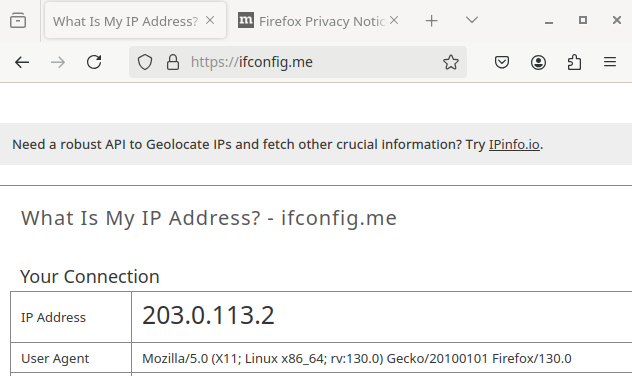

WireGuard Internet Access

If we want to use the Firefox Client to access any server on the Internet through its WireGuard connection (instead of merely any server in Site B), we need to make a couple of changes to the local side of the WireGuard Network we set up above.

First, we need to change the AllowedIPs setting in our local WireGuard configuration at ~/containers/wg-network1/wg/wg0.conf to send all IPv4 traffic (0.0.0.0/0) through the WireGuard connection:

# ~/containers/wg-network1/wg/wg0.conf

# local settings for Endpoint A

[Interface]

PrivateKey = AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAEE=

Address = 10.0.0.1/32

ListenPort = 51821

DNS = 9.9.9.9

# remote settings for Host β

[Peer]

PublicKey = fE/wdxzl0klVp/IR8UcaoGUMjqaWi3jAd7KzHKFS6Ds=

Endpoint = 203.0.113.2:51822

AllowedIPs = 0.0.0.0/0

And if we also want to send all DNS traffic through the WireGuard connection, we’ll also need to add a DNS setting to the [Interface] section, configuring it to use a DNS server available from the remote side of the connection (like the 9.9.9.9 Quad9 DNS server).
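If you switch a network back and forth between accessing only Site B and routing all IPv4 traffic, the only required change to wg0.conf is the AllowedIPs line (plus the optional DNS setting). A minimal bash sketch of that edit follows; the set_allowed_ips name is hypothetical, it assumes a single [Peer] section, and it keeps a backup copy with the original’s permissions (since the file contains a private key).

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the AllowedIPs line of a single-peer wg0.conf,
# e.g. from 192.168.200.0/24 (Site B only) to 0.0.0.0/0 (all IPv4 traffic).
# Assumes GNU or BusyBox sed (for "sed -i").
set_allowed_ips() {
  local conf=$1 cidrs=$2
  local count
  if [ ! -f "$conf" ]; then
    echo "$conf not found" >&2
    return 1
  fi
  # grep -c prints 0 (and exits 1) when nothing matches.
  count=$(grep -c '^AllowedIPs[[:space:]]*=' "$conf" || true)
  if [ "$count" -ne 1 ]; then
    echo "expected exactly one AllowedIPs line in $conf, found $count" >&2
    return 1
  fi
  # Keep a backup, preserving mode and ownership (the file holds a private key).
  cp -p "$conf" "$conf.bak" || return 1
  # "|" as the sed delimiter avoids clashing with the "/" in CIDR prefixes.
  sed -i "s|^AllowedIPs[[:space:]]*=.*|AllowedIPs = $cidrs|" "$conf"
}
```

For example, set_allowed_ips ~/containers/wg-network1/wg/wg0.conf 0.0.0.0/0 makes the AllowedIPs change shown above. The wg service only reads the file when it brings the interface up, so the services still need to be restarted afterwards.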

Next, we need to turn on the net.ipv4.conf.all.src_valid_mark kernel parameter for the wg service (otherwise the WireGuard startup script run inside the container will fail):

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

sysctls:

- net.ipv4.conf.all.src_valid_mark=1

volumes:

- ./wg:/etc/wireguard

...

After making those changes, close all the windows of the GUI apps that are using the wg service’s network namespace, and shut down all the services of the runtime compose file; then start them up again:

$ cd ~/containers/wg-network1

$ sudo docker compose down

[+] Running 2/2

✔ Container wg-network1-wg-1 Removed ...

✔ Network wg-network1 Removed ...

$ sudo docker compose up -d wg

[+] Running 2/2

✔ Network wg-network1 Created ...

✔ Container wg-network1-wg-1 Created ...

$ sudo docker compose run -d ff

[+] Running 1/1

✔ Container wg-network1-wg-1 Running ...

The WireGuard container, along with all the other containers defined by the ~/containers/wg-network1/docker-compose.yml file to share its network namespace, will now send all their IPv4 Internet traffic and DNS queries through Host β at the remote site.

Tip

If you’re having trouble accessing the Internet, make sure you’ve configured the firewall on the remote side of the WireGuard connection (Host β) to masquerade WireGuard traffic, as well as to allow all traffic forwarded from the WireGuard interface to go out through its Internet-facing network interface.

If you’re struggling with this, and are trying to use WireGuard in a container on the remote host, the Use for Site Masquerading section of the Building, Using, and Monitoring WireGuard Containers article will show you exactly how to do this.

Otherwise, if you’re using an iptables-based firewall on the remote host (with firewall rules similar to those shown in the Configure Firewall on Host β section of the WireGuard Point to Site Configuration guide), make sure the host’s firewall includes a rule that accepts traffic forwarded from the WireGuard interface out through its Internet-facing interface. Or if you’re using an nftables-based firewall on the remote host (with firewall rules similar to those shown in the Point to Site section of the WireGuard Nftables Configuration guide), make sure it includes an equivalent nftables rule.

Firefox With Video & Audio

If we want our containerized Firefox to play audio (plus be able to transmit audio and video from the host, so that, for example, we can use a videoconferencing webapp), we need to add a few more packages to our custom Firefox container image, as well as pass PulseAudio’s socket to the Firefox container at runtime.

First, edit the Dockerfile for the Firefox Client to add the ffmpeg and libpulse0 packages to it; and also to change the mkdir command that creates the /run/user/1000/ directory to simultaneously create the /run/user/1000/pulse/ subdirectory:

# ~/containers/wg-build/ff/Dockerfile

FROM ubuntu:24.04

ARG APT_GET="apt-get -qq -o=Dpkg::Use-Pty=0 --no-install-recommends" \

DEBIAN_FRONTEND=noninteractive \

DEBIAN_PRIORITY=critical

ENV LANG=en_US

RUN ${APT_GET} update && ${APT_GET} install ca-certificates

COPY conf/packages.mozilla.org.asc /etc/apt/keyrings/packages.mozilla.org.asc

COPY conf/mozilla.list /etc/apt/sources.list.d/mozilla.list

COPY conf/mozilla-pin /etc/apt/preferences.d/mozilla-pin

RUN ${APT_GET} update && ${APT_GET} install \

ffmpeg \

firefox \

libpulse0 \

&& rm -rf /var/lib/apt/lists/*

RUN mkdir -p /run/user/1000/pulse && \

chmod -R 700 /run/user/1000 && \

chown -R 1000:1000 /run/user/1000

ENV WAYLAND_DISPLAY=wayland-0 \

XDG_RUNTIME_DIR=/run/user/1000

USER ubuntu

WORKDIR /home/ubuntu

CMD firefox

This will add PulseAudio support to the image, as well as set it up to receive the PulseAudio socket that we will pass from the host to the container’s /run/user/1000/pulse/ directory at runtime.

Note

The ffmpeg package isn’t actually needed per se for video or audio support; however, it conveniently pulls in a number of common video and audio codecs that may be needed to play the video or audio streams from various websites.

Rebuild the ff image with our updates:

$ cd ~/containers/wg-build

$ sudo docker compose build ff

[+] Building ...

=> => naming to docker.io/library/my-ff

=> [build] resolving provenance for metadata file

Then edit the ff service in our runtime compose file to add another volume mapping; this volume mapping will share the host’s PulseAudio socket with the container:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

sysctls:

- net.ipv4.conf.all.src_valid_mark=1

volumes:

- ./wg:/etc/wireguard

...

ff:

image: my-ff

network_mode: 'service:wg'

volumes:

- $XDG_RUNTIME_DIR/pulse/native:/run/user/1000/pulse/native

- $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY:/run/user/1000/wayland-0

- ./ff:/home/ubuntu

Mapping the PulseAudio socket file like this will allow the container to send sound to the host (through the host’s speakers), and it will also allow the container to receive sound from the host (through the host’s microphone).

Note

This assumes you’re running PulseAudio on the host (and using the default PulseAudio configuration). If you’re doing something different, see the x11docker project wiki for other sound options.

We don’t actually need to make any changes to the ff service to allow it to display video (it can already send video to the host’s Wayland-display socket). However, if we want to allow the container to receive video from the host’s webcam, we need to map the host’s /dev/video0 device to the same location in the container:

# ~/containers/wg-network1/docker-compose.yml

services:

wg:

image: procustodibus/wireguard

cap_add:

- NET_ADMIN

sysctls:

- net.ipv4.conf.all.src_valid_mark=1

volumes:

- ./wg:/etc/wireguard

...

ff:

image: my-ff

network_mode: 'service:wg'

devices:

- /dev/video0:/dev/video0

volumes:

- $XDG_RUNTIME_DIR/pulse/native:/run/user/1000/pulse/native

- $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY:/run/user/1000/wayland-0

- ./ff:/home/ubuntu

Now if we close any running windows from the ff service and restart it, we should be able to use videoconferencing webapps in our isolated Firefox instance:
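Because the ff service now depends on three host resources, a quick check before starting it can save some head-scratching when sound or video silently doesn’t work. The following bash sketch (ff_preflight is a hypothetical name) verifies that the PulseAudio and Wayland sockets exist as Unix sockets and that the webcam exists as a character device, using the same default paths as the compose file above.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the ff service's host-side mounts.
# Arguments (all optional): runtime dir, Wayland display name, camera device.
ff_preflight() {
  local runtime=${1:-${XDG_RUNTIME_DIR:-}}
  local display=${2:-${WAYLAND_DISPLAY:-wayland-0}}
  local camera=${3:-/dev/video0}
  local status=0

  # -S: existing Unix socket; -c: existing character device.
  if [ ! -S "$runtime/pulse/native" ]; then
    echo "missing PulseAudio socket: $runtime/pulse/native" >&2
    status=1
  fi
  if [ ! -S "$runtime/$display" ]; then
    echo "missing Wayland socket: $runtime/$display" >&2
    status=1
  fi
  if [ ! -c "$camera" ]; then
    echo "missing camera device: $camera" >&2
    status=1
  fi
  return "$status"
}
```

Run it as your regular user; it reports each missing resource and returns a non-zero status if anything is absent.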

$ cd ~/containers/wg-network1

$ sudo docker compose run -d ff

[+] Running 1/1

✔ Container wg-network1-wg-1 Running ...

Firefox Extras

Lastly, here are a couple more environment variables for Firefox that you may find useful to set (either at runtime, or to bake into the container image itself):

MOZ_CRASHREPORTER_DISABLE=1

This will suppress Firefox’s crash-reporter dialog when Firefox starts up after a crash. You won’t need this if Firefox never crashes — but if it does, you may end up in a doom loop of crash-reporter dialogs. This environment variable will break the loop.

TZ=America/Los_Angeles

This will set your timezone to US Pacific time. By default, web pages running in a containerized Firefox instance will not know what timezone the host is using — and will therefore display dates and times in GMT. If you want them to use your local timezone, set it with this environment variable.
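Rather than hard-coding a timezone, you could pass the host’s own timezone to the container at runtime. The following bash sketch derives it from the host’s /etc/localtime symlink; the host_tz name is hypothetical, and it assumes /etc/localtime is a symlink into a zoneinfo directory, as it is on most current Linux distributions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive an IANA timezone name (e.g. America/Los_Angeles)
# from a localtime symlink such as /etc/localtime -> /usr/share/zoneinfo/...
host_tz() {
  local localtime=${1:-/etc/localtime}
  local target
  # readlink (without -f) prints the symlink's immediate target.
  target=$(readlink "$localtime") || {
    echo "$localtime is not a symlink" >&2
    return 1
  }
  case $target in
    */zoneinfo/*) echo "${target#*/zoneinfo/}" ;;
    *) echo "unexpected symlink target: $target" >&2; return 1 ;;
  esac
}
```

For example, sudo docker compose run -d -e TZ="$(host_tz)" ff would start Firefox with the host’s timezone, overriding any TZ value baked into the image.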

Also, the only font family built into the Firefox container will be DejaVu, so you may want to add some additional fonts to the container image:

- Noto

This family is intended to provide a glyph for every Unicode character (which Firefox can use to avoid displaying “blank boxes” when a web page’s own font stack doesn’t include the glyphs for certain characters on the page). The fonts-noto-* Debian packages will install it.

- Microsoft core fonts

Many websites include at least one of these fonts in their font stack, expecting that these fonts will be installed on every computer. These fonts can be installed via the ttf-mscorefonts-installer Debian package; however, they won’t be installed unless you first accept a EULA (End User License Agreement). To accept the EULA programmatically, use the debconf-set-selections command to mark the EULA as accepted.

And also, you may run across web pages that require WebGL support; the mesa-utils Debian package pulls in the necessary libraries for that.

Note

This does not actually enable hardware GPU access. To allow the container to access the host’s GPU, you’d also need to 1) map the host’s GPU devices (/dev/dri et al) into the container, 2) add the container’s ubuntu user to the render and video groups, and 3) install the same GPU drivers in the container that the host is using. See the x11docker project wiki for more information.

The following version of the Firefox Dockerfile includes all these extras:

# ~/containers/wg-build/ff/Dockerfile

FROM ubuntu:24.04

ARG APT_GET="apt-get -qq -o=Dpkg::Use-Pty=0 --no-install-recommends" \

DEBIAN_FRONTEND=noninteractive \

DEBIAN_PRIORITY=critical

ENV LANG=en_US

RUN ${APT_GET} update && ${APT_GET} install ca-certificates

COPY conf/packages.mozilla.org.asc /etc/apt/keyrings/packages.mozilla.org.asc

COPY conf/mozilla.list /etc/apt/sources.list.d/mozilla.list

COPY conf/mozilla-pin /etc/apt/preferences.d/mozilla-pin

RUN echo ttf-mscorefonts-installer msttcorefonts/accepted-mscorefonts-eula select true | \

debconf-set-selections

RUN ${APT_GET} update && ${APT_GET} install \

ffmpeg \

firefox \

fonts-noto \

fonts-noto-cjk \

fonts-noto-color-emoji \

libpulse0 \

mesa-utils \

ttf-mscorefonts-installer \

&& rm -rf /var/lib/apt/lists/*

RUN mkdir -p /run/user/1000/pulse && \

chmod -R 700 /run/user/1000 && \

chown -R 1000:1000 /run/user/1000

ENV MOZ_CRASHREPORTER_DISABLE=1 \

TZ=America/Los_Angeles \

WAYLAND_DISPLAY=wayland-0 \

XDG_RUNTIME_DIR=/run/user/1000

USER ubuntu

WORKDIR /home/ubuntu

CMD firefox